LLM-Driven UI Rendering with Quarkus, Langchain4j & Ollama

Build a Java Application where the LLM decides how the UI should look (with a safe JSON fallback).

Ever wondered what happens when your UI starts listening to your LLM?

In this hands-on tutorial, you’ll build a Quarkus web application that lets a local Large Language Model (LLM), powered by Ollama and Langchain4j, dictate how information should be rendered on screen. It does this by embedding structured rendering hints in its JSON responses.

This architecture decouples what is shown from how it’s shown, turning your LLM into a frontend-aware assistant. If a renderer doesn’t exist yet, your app will gracefully fall back to displaying the raw JSON so you never lose visibility.

I have wanted to write this tutorial for a while now and have been experimenting with various ways to implement it. So, besides the awesome result in the end, there’s a ton of learning in here about what local LLMs are capable of and which pitfalls you will encounter.

What You’ll Need

Make sure you have the following:

Java 17+ and Maven 3.8+

Ollama installed and models like llama3.2:3b and qwen3:8b pulled

Quarkus CLI or the code.quarkus.io generator

A web browser, terminal, and your favorite IDE

Project Setup

We start by scaffolding a new Quarkus app and configuring the Ollama and model integration. This time we are using the Quarkus CLI. Feel free to use Maven or Gradle if you like. You can also jump ahead to my GitHub repository and download the complete thing.

quarkus create app com.example:dynamic-renderer \

--extension='rest,rest-jsonb,langchain4j-ollama'

cd dynamic-renderer

Ollama + Langchain4j Configuration

With Quarkus you can run a local model using Ollama in three ways. Either with natively installed Ollama, a locally running Ollama container on your machine (maybe with something like Ramalama) or through a Dev Service container. We are using the first way here. Let’s pull the models locally first.

ollama pull llama3.2:3b

ollama pull qwen3:8b

Choosing the Right Local Models

The key to this tutorial is choosing the right local models. We are going to work with two. A “Researcher” model and a “Renderer” model. A two-step pipeline keeps your AI honest. The Researcher hunts for facts and writes the answer, nothing else. The Renderer then reshapes that text into the exact JSON your UI expects. Each model gets a single, clear brief, so you sidestep tangled prompts and malformed payloads. Need to tweak the front-end? Adjust the Renderer prompt and ship; the content engine stays untouched.
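To make the two-step idea concrete before we touch any real model, here is a minimal sketch in plain Java. The class name TwoStagePipeline and the stub lambdas are my own placeholders, not part of the tutorial code: each stage is just a function from text to text, and the second stage only ever sees the output of the first.

```java
import java.util.function.Function;

/** Two-stage pipeline sketch: stage one writes the answer, stage two formats it. */
final class TwoStagePipeline {

    // Hypothetical stand-ins for the two AI services; each maps text to text.
    private final Function<String, String> researcher;
    private final Function<String, String> renderer;

    TwoStagePipeline(Function<String, String> researcher, Function<String, String> renderer) {
        this.researcher = researcher;
        this.renderer = renderer;
    }

    /** Runs research first, then hands only the research text to the renderer. */
    String ask(String question) {
        String researchText = researcher.apply(question);
        return renderer.apply(researchText);
    }

    /** Stub wiring for the demo; a real setup would call the two models instead. */
    static TwoStagePipeline withStubs() {
        Function<String, String> researcher = q -> "Summary for: " + q;
        Function<String, String> renderer = text ->
                "{\"elements\":[{\"renderHint\":\"text\",\"data\":{\"title\":\"Answer\",\"text\":\""
                        + text + "\"}}]}";
        return new TwoStagePipeline(researcher, renderer);
    }

    public static void main(String[] args) {
        System.out.println(withStubs().ask("Quarkus"));
    }
}
```

Because the stages only share a String, you can swap either one (a different model, a different prompt) without touching the other.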

The "Researcher" AI is going to use a search tool and do the heavy lifting of curating information about a user question. This model needs to be good at understanding instructions and, crucially, at "function calling" or "tool use." It needs to recognize when your SearchTool is required and generate the correct request for it.

I went with Meta's llama3.2:3b model. The llama family has shown excellent instruction-following and reasoning capabilities out of the box. The 3-billion parameter version is the sweet spot for performance vs. hardware requirements. It's fast, has native support for tool use, and is widely supported. It's more than capable of handling the synthesis task.

The "Renderer" AI has exactly one task and that is to deliver reliable JSON Output: This model's primary job is format adherence. It must reliably produce a clean JSON object based on the system prompt. Larger models are generally better at this, as they can follow complex structural constraints more accurately.

I am using qwen3:8b here because it gave me the best and most reliable responses. My general observation is that the smaller models are not very reliable when it comes to producing the desired JSON output. The only way around this is to use much larger models in the 70B range. They are a massive leap in capability but also require significantly more resources.

Configuring Ollama with Quarkus and Langchain4j is done in src/main/resources/application.properties:

# Researcher

quarkus.langchain4j.ollama.researcher.chat-model.model-id=llama3.2:3b

quarkus.langchain4j.ollama.researcher.chat-model.temperature=0.8

quarkus.langchain4j.ollama.researcher.timeout=60s

quarkus.langchain4j.ollama.researcher.log-requests=true

quarkus.langchain4j.ollama.researcher.log-responses=true

# renderer

quarkus.langchain4j.ollama.renderer.chat-model.model-id=qwen3:8b

# Lower temperature for more deterministic JSON output

quarkus.langchain4j.ollama.renderer.chat-model.temperature=0.1

quarkus.langchain4j.ollama.renderer.chat-model.format=json

quarkus.langchain4j.ollama.renderer.timeout=120s

quarkus.langchain4j.ollama.renderer.log-requests=true

quarkus.langchain4j.ollama.renderer.log-responses=true

Note: We lower the temperature for the Renderer to be as precise as possible, while the Researcher gets to stay a little more creative.

About Schema Mapping, Guardrails, and JSON parsing

When you look at the code of the two AI services below, you will see that both use String as the return type and do not try to use Langchain4j’s schema mapping capabilities. While schema mapping is technically possible and works with comparably simple POJO constructs, it is still challenging for deeper, nested result hierarchies. Below you can see the different approaches to “sanitizing” LLM return text:

The Renderer is prompted to return strict JSON. It has a JsonGuardrail attached that tries to validate the output and reprompts the LLM if it isn’t valid JSON. This way, we can guarantee that the output is valid JSON or the request fails.

The LearningResource tries to parse the JSON into a couple of complex POJOs (model/**) and, if that fails, returns the valid JSON from the LLM. This path is a relic from my first attempts with complex AIService return types. You can experiment with having the Renderer return a RenderedResponse list, but I could not get this to work reliably. So, technically the model isn’t necessary for this tutorial at all, but I wanted to keep it to share the learning.

The Model Classes and Jackson

Even if we don’t need the full blown model, you can find it in the model package.

The model package is structured around a hierarchy of UI elements, beginning with the RenderedResponse class:

RenderedResponse: This is the top-level class that holds a list of UIElement objects. It represents the full response to be rendered and provides a toString() method for pretty-printing as JSON.

UIElement (abstract): This is the base class for all UI elements. It uses Jackson annotations to support polymorphic deserialization based on the renderHint property. Subclasses include:

TextElement: Contains a Data inner class with title and text fields.

BookElement: Contains a Data inner class with title and author fields.

and further subclasses depending on UI components.
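The real model classes rely on Jackson annotations for this. If you just want to see the underlying idea without any library, here is a JDK-only sketch that picks an element type from the renderHint value. Everything in it (RenderHintDispatch, UiElement, UnknownElement, the fromHint method) is a hypothetical illustration and not the code from the repository.

```java
import java.util.Map;

/** JDK-only sketch: choose an element type based on the renderHint value. */
final class RenderHintDispatch {

    sealed interface UiElement permits TextElement, BookElement, UnknownElement {}

    record TextElement(String title, String text) implements UiElement {}

    record BookElement(String title, String author) implements UiElement {}

    /** Keeps the raw data for hints that have no dedicated type yet. */
    record UnknownElement(String renderHint, Map<String, String> data) implements UiElement {}

    /** Maps a hint plus its data to a typed element, falling back to UnknownElement. */
    static UiElement fromHint(String renderHint, Map<String, String> data) {
        return switch (renderHint) {
            case "text" -> new TextElement(data.get("title"), data.get("text"));
            case "book" -> new BookElement(data.get("title"), data.get("author"));
            default -> new UnknownElement(renderHint, data);
        };
    }

    public static void main(String[] args) {
        System.out.println(fromHint("book", Map.of("title", "Dune", "author", "Frank Herbert")));
        System.out.println(fromHint("chart", Map.of("title", "Sales")));
    }
}
```

The default branch is the interesting part: an unknown hint still produces an element that carries its data, which mirrors the raw JSON fallback used on the frontend.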

If you want to take a look, it’s in my GitHub repository. I am not going to describe it further here.

The Tools - Search and JSON Guardrail

I have used a search engine in another post. This time we are going to use a super simple approach with Jsoup. Add the following dependency to your pom.xml

<dependency>

<!-- jsoup HTML parser library @ https://jsoup.org/ -->

<groupId>org.jsoup</groupId>

<artifactId>jsoup</artifactId>

<version>1.20.1</version>

</dependency>

And create the file src/main/java/com/example/tool/LearningMaterialTools.java

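package com.example.tool;

import java.io.IOException;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

import org.jsoup.Jsoup;

import dev.langchain4j.agent.tool.Tool;
import jakarta.enterprise.context.ApplicationScoped;

@ApplicationScoped
public class LearningMaterialTools {

    // Upper bound on how much search text we hand back to the model
    private static final int MAX_RESULT_CHARS = 2000;

    @Tool("Perform a web search to retrieve information online")
    String webSearch(String q) throws IOException {
        // URL-encode the query so spaces and special characters survive
        String webUrl = "https://html.duckduckgo.com/html/?q=" + URLEncoder.encode(q, StandardCharsets.UTF_8);
        String text = Jsoup.connect(webUrl).get().getElementsByClass("results").text();
        // Truncate safely: results shorter than the cap must not throw
        return text.substring(0, Math.min(text.length(), MAX_RESULT_CHARS));
    }
}

It basically does a DuckDuckGo search for a string. We are using this later in the Researcher AIService.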

Now we need the Langchain4j guardrail. Create the file src/main/java/com/example/tool/JsonGuardrail.java

package com.example.tool;

import com.fasterxml.jackson.databind.ObjectMapper;

import dev.langchain4j.data.message.AiMessage;

import io.quarkiverse.langchain4j.guardrails.OutputGuardrail;

import io.quarkiverse.langchain4j.guardrails.OutputGuardrailResult;

import jakarta.enterprise.context.ApplicationScoped;

import jakarta.inject.Inject;

@ApplicationScoped

public class JsonGuardrail implements OutputGuardrail {

@Inject

ObjectMapper mapper;

@Override

public OutputGuardrailResult validate(AiMessage responseFromLLM) {

try {

mapper.readTree(responseFromLLM.text());

} catch (Exception e) {

return reprompt("Invalid JSON", e, "Make sure you return a valid JSON object");

}

return success();

}

}

It implements the OutputGuardrail interface and is automatically called to sanitize the output of the Renderer.
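If the reprompt mechanic feels abstract, the following plain Java sketch shows the general idea: call the model, check the reply, and ask again with a corrective hint until the reply passes or the attempts run out. This is not how Quarkus Langchain4j implements guardrails internally. RepromptLoop, callUntilValid, and the deliberately crude looksLikeJsonObject check are hypothetical names of mine; a real check would use a JSON parser, as the guardrail above does.

```java
import java.util.function.Predicate;
import java.util.function.UnaryOperator;

/** Sketch of a validate-and-reprompt loop around a text-in, text-out model call. */
final class RepromptLoop {

    /**
     * Calls the model, validates the reply, and re-asks with the hint appended
     * until a reply passes validation or maxAttempts is reached.
     */
    static String callUntilValid(UnaryOperator<String> model, Predicate<String> isValid,
                                 String prompt, String hint, int maxAttempts) {
        String currentPrompt = prompt;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            String reply = model.apply(currentPrompt);
            if (isValid.test(reply)) {
                return reply;
            }
            // Reply was rejected: repeat the original prompt plus the corrective hint
            currentPrompt = prompt + "\n" + hint;
        }
        throw new IllegalStateException("No valid reply after " + maxAttempts + " attempts");
    }

    /** Crude placeholder check (assumption): only looks at the outer braces. */
    static boolean looksLikeJsonObject(String s) {
        String t = s == null ? "" : s.strip();
        return t.startsWith("{") && t.endsWith("}");
    }

    public static void main(String[] args) {
        // Fake model that only behaves once it has seen the hint
        UnaryOperator<String> fakeModel = p -> p.contains("valid JSON") ? "{}" : "Sure! Here you go";
        System.out.println(callUntilValid(fakeModel, RepromptLoop::looksLikeJsonObject,
                "render this", "Make sure you return a valid JSON object", 3));
    }
}
```

The important property is the hard stop: after a bounded number of attempts the call fails loudly instead of passing broken output downstream.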

AIServices - Researcher and Renderer

Now that we have the tools and basics in place, it is time to implement the Researcher and Renderer. Create the file src/main/java/com/example/service/LearningAssistant.java

package com.example.service;

import com.example.tool.JsonGuardrail;

import com.example.tool.LearningMaterialTools;

import dev.langchain4j.service.SystemMessage;

import dev.langchain4j.service.UserMessage;

import io.quarkiverse.langchain4j.RegisterAiService;

import io.quarkiverse.langchain4j.guardrails.OutputGuardrails;

public interface LearningAssistant {

@RegisterAiService(tools = LearningMaterialTools.class, modelName = "researcher")

interface Researcher {

@SystemMessage("""

...

You are a helpful assistant that recommends high-quality

learning materials. Your task is to provide a concise summary

in response to the user's topic or question, followed by a

curated list of relevant books, podcasts, articles, or other resources.

Use the webSearch tool to find up-to-date and trustworthy sources.

Prioritize clarity, relevance, and usefulness.

Always include links when possible, and ensure your tone

remains professional and helpful.

""")

String research(@UserMessage String question);

}

@RegisterAiService(modelName = "renderer") // Corresponds to 'quarkus.langchain4j.ollama.renderer.*'

@OutputGuardrails(JsonGuardrail.class) // Ensure the output is valid JSON

interface Renderer {

@SystemMessage("""

...

Your final output must be a single JSON object with one key, \"elements\", which contains the JSON array.

Each element in the array must be an object with two keys: \"renderHint\" (must be one of: 'text', 'book', 'podcast', 'list', 'website') and \"data\" (an object containing the actual content).

Data structure for each renderHint:

- 'text': { "title": string, "text": string }

- 'book': { "title": string, "author": string }

- 'podcast': { "title": string, "description": string }

- 'list': { "title": string, "items": array of string }

- 'website': { "title": string, "url": string }

Example: { "elements": [ { "renderHint": "text", "data": {"title": "...", "text": "..."} } ] }

Output ONLY the raw JSON object and nothing else.

Do not use any renderHint other than those listed above.

""")

String render(@UserMessage String inputText);

}

}

The LearningAssistant interface defines two AI service contracts:

The Researcher provides concise summaries and curated lists of learning resources in response to user questions. It can also call the web search tool, registered via tools = LearningMaterialTools.class.

The Renderer transforms this information into a structured JSON format, ensuring the output matches a specific schema for rendering UI elements. It has the @OutputGuardrails(JsonGuardrail.class) annotation attached.

Both are using different models (modelName) as configured in the application.properties.

REST Endpoint - Talking to our AIServices

Next step is to expose the AIServices and tools and magic to the world.

Create the file src/main/java/com/example/LearningResource.java

package com.example;

import org.jboss.logging.Logger;

import com.example.model.RenderedResponse;

import com.example.service.LearningAssistant;

import com.fasterxml.jackson.databind.ObjectMapper;

import jakarta.inject.Inject;

import jakarta.ws.rs.Consumes;

import jakarta.ws.rs.FormParam;

import jakarta.ws.rs.POST;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Produces;

import jakarta.ws.rs.core.MediaType;

@Path("/")

public class LearningResource {

@Inject

LearningAssistant.Researcher researcherAI;

@Inject

LearningAssistant.Renderer rendererAI;

@Inject

ObjectMapper objectMapper;

private static final Logger LOG = Logger.getLogger(LearningResource.class);

@POST

@Path("/ask")

@Consumes(MediaType.APPLICATION_FORM_URLENCODED)

@Produces(MediaType.APPLICATION_JSON)

public String ask(@FormParam("question") String question) throws Exception {

// 1. Call the Researcher AI to get the content

String researchResult = researcherAI.research(question);

// 2. Call the Renderer AI to structure the content

String jsonResponse = rendererAI.render(researchResult);

LOG.infof("RAW JSON from Renderer: %s", jsonResponse);

// 3. Parse the JSON into our POJOs

RenderedResponse renderedResponse = null;

try {

// A simple way to clean potential markdown ```json ``` wrapper

// String cleanJson = jsonResponse.replace("```json", "").replace("```",

// "").trim();

renderedResponse = objectMapper.readValue(jsonResponse, RenderedResponse.class);

// LOG.infof("Parsed JSON: %s", jsonResponse);

if (renderedResponse != null && renderedResponse.elements != null) {

for (com.example.model.UIElement element : renderedResponse.elements) {

LOG.infof("Element class: %s, renderHint: %s",

element == null ? "null" : element.getClass().getName(),

element == null ? "null" : element.renderHint);

}

}

} catch (Exception e) {

// Pass the exception first and the JSON as a format argument, not as part of the format string
LOG.errorf(e, "Failed to parse JSON: %s", jsonResponse);

// If parsing fails, return the raw JSON response because the Guardrail ensures

// it's valid JSON

return jsonResponse;

}

return renderedResponse.toString();

}

}

You can see that we call the Researcher first, hand its result to the Renderer, and then try to parse the JSON into the model. If parsing fails, we fall back to the guardrail-validated JSON straight from the Renderer.

What did you do here, Markus and why?

Well, I was ambitious. As a backend guy, my initial idea for this tutorial was to implement it on the server side with Qute and template includes. It turns out this isn’t as easy as I thought it would be, and one feature it needs is still waiting to be added as an enhancement. So I decided to keep that for a future iteration where I might add server-side rendering.

Build the Web Layer

This is a little painful for me, but I had to implement the web layer with JavaScript. Grab the stylesheet from my repository and copy it next to the index.html file into src/main/resources/META-INF/resources. The page links to it as style.css, so make sure the file name matches.

The src/main/resources/META-INF/resources/index.html

<!DOCTYPE html>

<html>

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1" />

<title>Ask Quantum – Smart Renderer</title>

<link rel="stylesheet" href="style.css" />

<script type="module">

import { h, render } from "https://esm.sh/preact@10.19.2";

import { useEffect, useState } from "https://esm.sh/preact@10.19.2/hooks";

import htm from "https://esm.sh/htm@3.1.1";

const html = htm.bind(h);

const TextBlock = ({ title, text }) => html`

<section class="fade-in" style="margin-bottom: 1.5rem;">

<h2>${title}</h2>

<p>${text}</p>

</section>

`;

const ListBlock = ({ title, items }) => html`

<section class="fade-in" style="margin-bottom: 1.5rem;">

<h3>${title}</h3>

<ul>

${items.map((item) => html`<li>${item}</li>`)}

</ul>

</section>

`;

const WebsiteLink = ({ title, url }) => html`

<div class="fade-in" style="margin-bottom: 1rem;">

<a href="${url}" target="_blank" rel="noopener noreferrer">

🔗 ${title}

</a>

</div>

`;

// The Renderer prompt defines 'book' data as { title, author }; there is no url field
const Book = ({ title, author }) => html`
<div class="fade-in" style="margin-bottom: 1rem;">
<p><strong>${title}</strong> by ${author}</p>
</div>
`;

// The Renderer prompt defines 'podcast' data as { title, description }; there is no url field
const Podcast = ({ title, description }) => html`
<div class="fade-in" style="margin-bottom: 1rem;">
<h3>${title}</h3>
<p>${description}</p>
</div>
`;

const UnsupportedRender = ({ renderHint, data }) => html`

<div class="fade-in" style="margin-bottom: 1rem;">

<h3>${renderHint}</h3>

<pre style="font-size: 0.9rem;">${JSON.stringify(data, null, 2)}</pre>

</div>

`;

const Renderer = ({ elements }) => html`

<div>

${elements.map(({ renderHint, data }) => {

switch (renderHint) {

case "text":

return html`<${TextBlock} ...${data} />`;

case "list":

return html`<${ListBlock} ...${data} />`;

case "website":

return html`<${WebsiteLink} ...${data} />`;

case "book":

return html`<${Book} ...${data} />`;

case "podcast":

return html`<${Podcast} ...${data} />`;

default:

return html`<${UnsupportedRender} renderHint=${renderHint} data=${data} />`;

}

})}

</div>

`;

const App = () => {

const [elements, setElements] = useState(null);

const [question, setQuestion] = useState("");

const [loading, setLoading] = useState(false);

const [error, setError] = useState(null);

const submitQuestion = (e) => {

e.preventDefault();

setLoading(true);

setError(null);

setElements(null); // ← Clear previous results

const formData = new URLSearchParams(); // ← declare it here

formData.append("question", question);

fetch("/ask", {

method: "POST",

headers: {

"Content-Type": "application/x-www-form-urlencoded",

},

body: formData.toString(),

})

.then((res) => {

if (!res.ok) throw new Error("Failed to fetch response");

return res.json();

})

.then((data) => {

setElements(data.elements);

setLoading(false);

})

.catch((err) => {

setError(err.message);

setLoading(false);

});

};

return html`

<div>

<header>

<h1>Ask The Llama</h1>

<p>A smarter way to explore things, ideas, and knowledge – powered by AI</p>

</header>

<form onSubmit=${submitQuestion}>

<input

type="text"

name="question"

size="50"

placeholder="Ask a question about quantum physics..."

value=${question}

required

onInput=${(e) => setQuestion(e.target.value)}

/>

<button type="submit">Ask</button>

</form>

${loading && html`<p class="loading"><span class="dot">.</span></p>`}

${error && html`<p class="error">${error}</p>`}

${elements && html`<${Renderer} elements=${elements} />`}

</div>

`;

};

render(html`<${App} />`, document.body);

</script>

</head>

<body style="font-family: sans-serif; padding: 2rem;"></body>

</html>

The index.html file provides the frontend for the application, allowing users to submit questions and view AI-generated answers. It uses Preact and HTM to create a dynamic, interactive UI: users enter a question in a form, which sends a request to the backend (/ask endpoint). The response, containing structured elements like text, lists, books, podcasts, and websites, is rendered on the page using dedicated components for each type. Any element with an unknown renderHint falls back to a raw JSON view. The page also handles loading and error states.

This is basically it. Two tools, two services, one RESTful endpoint, and a little bit of HTML.

Run and Test

Start Quarkus in Dev Mode:

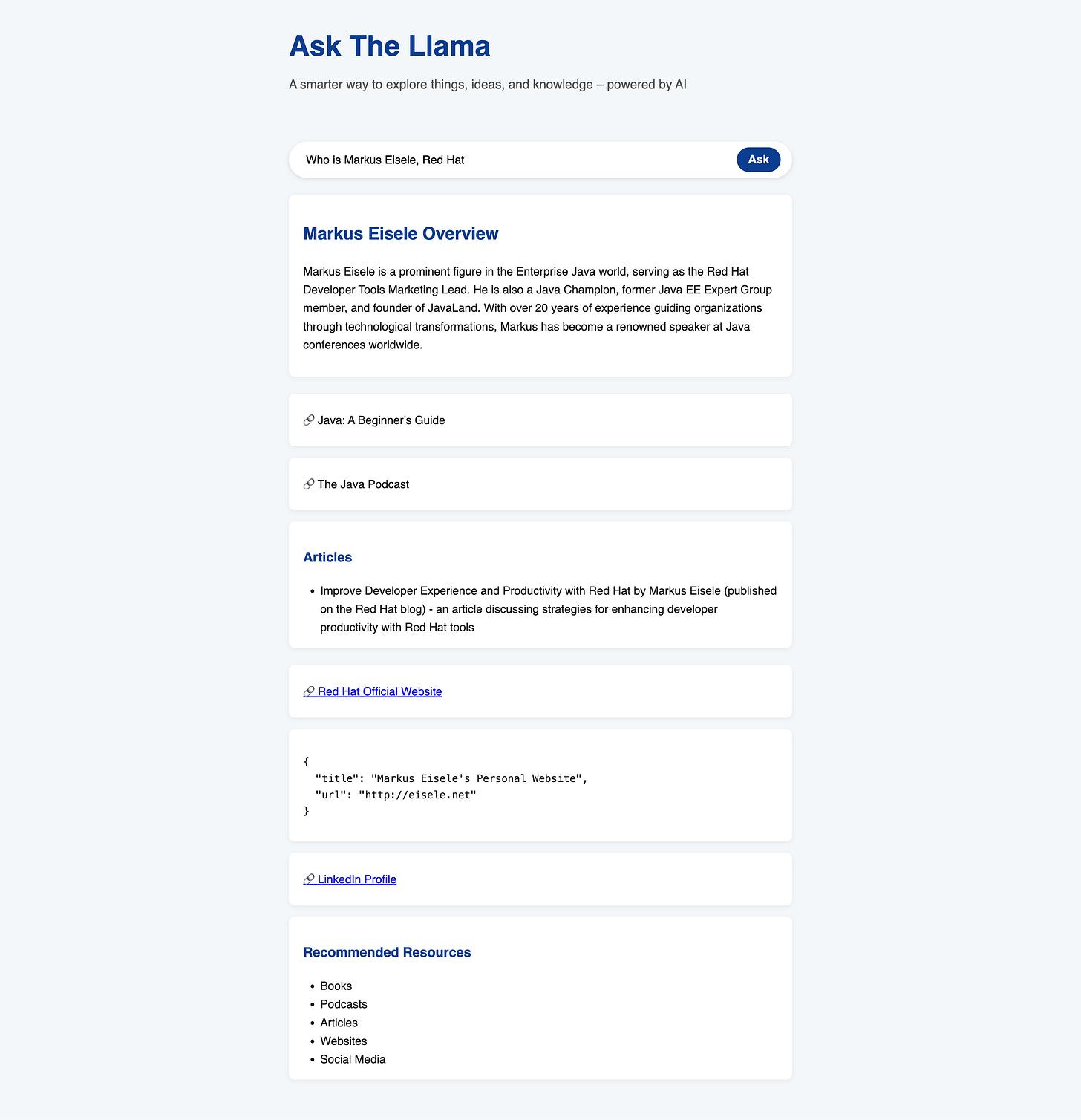

./mvnw quarkus:dev

Try this query:

Who is Markus Eisele, Red Hat

And see the magic come to life.
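If you would rather poke the endpoint without the browser, here is a small sketch using the JDK's built-in HttpClient. It assumes Quarkus dev mode is listening on http://localhost:8080 (the default port) and that the /ask endpoint reads a single form field named question, as in the resource above. AskClient and its helper methods are hypothetical names of mine.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/** Sketch of a command-line client for the /ask endpoint. */
final class AskClient {

    /** Encodes the question as the single form field the endpoint reads. */
    static String formBody(String question) {
        return "question=" + URLEncoder.encode(question, StandardCharsets.UTF_8);
    }

    /** Builds the form POST; baseUrl is an assumption about where Quarkus runs. */
    static HttpRequest buildRequest(String baseUrl, String question) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/ask"))
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(formBody(question)))
                .build();
    }

    public static void main(String[] args) throws InterruptedException {
        HttpRequest request = buildRequest("http://localhost:8080", "Who is Markus Eisele, Red Hat");
        try {
            // Local models can take a while, so be patient here
            HttpResponse<String> response = HttpClient.newHttpClient()
                    .send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println("HTTP " + response.statusCode());
            System.out.println(response.body());
        } catch (IOException e) {
            System.err.println("Request failed. Is Quarkus running? " + e.getMessage());
        }
    }
}
```

The response body is the same JSON the frontend receives, which makes this handy for checking what the Renderer actually produced.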

Key Takeaways

You cannot force a model to do too much.

Isolate generation from formatting, wire each piece to a single-purpose model, and put a guardrail on every external boundary.

Qute cannot conditionally include templates yet. Sigh.

One fallback is good. Two are better.

Adding a new renderer is as easy as updating your index.html

Where to Go From Here

Use JavaScript libraries to render more dynamic components. Charts for example.

Store user renderer preferences

Add multi-turn memory to the assistant

Integrate more external APIs (weather, geolocation, etc.)

Port to other JavaScript frontends

Final Thoughts

This pattern — LLM as data provider + renderer hint — is a powerful way to let AI drive your user interface without handing over full control. It strikes a healthy balance between smart suggestions and safe rendering, all wrapped in a fast, dev-friendly Quarkus app.

Happy rendering, and may your JSON always parse.